How Tolan builds voice-first AI with GPT-5.1

TL;DR

Tolan is a voice-first AI companion that uses GPT-5.1 to enable natural, open-ended conversations with animated characters. Key innovations include real-time context reconstruction, vector-based memory systems, and character-driven design for consistent personality.

Key Takeaways

- •GPT-5.1's improved steerability and latency enabled Tolan to create more responsive voice interactions with consistent character personalities.

- •Tolan rebuilds context from scratch each turn using summaries, persona cards, and vector-retrieved memories to handle conversational volatility.

- •A memory system using text embeddings and high-speed vector databases retains emotional cues and facts, with nightly compression to maintain quality.

- •Voice AI requires sub-second latency and adaptive systems that can pivot mid-conversation to feel natural and engaging.

- •Tolan's architecture principles include designing for conversational shifts, treating latency as critical, and using retrieval-based memory rather than large context windows.

Tags

Tolan(opens in a new window) is a voice-first AI companion where people talk with a personalized, animated character that learns from conversations over time.

Built by Portola, a veteran team with a prior exit, the app is designed for ongoing, open-ended dialogue rather than quick prompts and replies. “We saw the rise of ChatGPT and knew voice was the next frontier,” says Quinten Farmer, co-founder and CEO of Portola. “But voice is harder. You’re not just responding to typed prompts; you’re holding a live, meandering conversation.”

Voice AI raises the bar on latency and context management, but it also enables more open-ended, exploratory interactions than text.

With foundation models becoming faster, cheaper, and more capable, the team focused their efforts on two key levers: memory and character design. Portola built a character-driven universe, shaped by award-winning animators and a science fiction writer, using a real-time context management system to keep personality and memory consistent as conversations unfold.

The release of the GPT‑5.1 models marked a turning point, delivering major gains in steerability and latency that brought those pieces together, unlocking a more responsive and engaging voice experience.

“GPT-5.1 gave us the steerability to finally express the characters we had in mind. It wasn’t just smarter—it was more faithful to the tone and personality we wanted to create.”

Designing for natural voice interactions

Tolan’s architecture is shaped by the demands of voice. Voice users expect instant, natural responses, even when conversations shift midstream. Tolan had to respond quickly, track changing topics, and maintain a consistent personality without lag or tone drift.

To feel natural, conversations required near-instant latency. Introducing OpenAI GPT‑5.1 and the Responses API cut speech initiation time by over 0.7 seconds—enough to noticeably improve conversational flow.

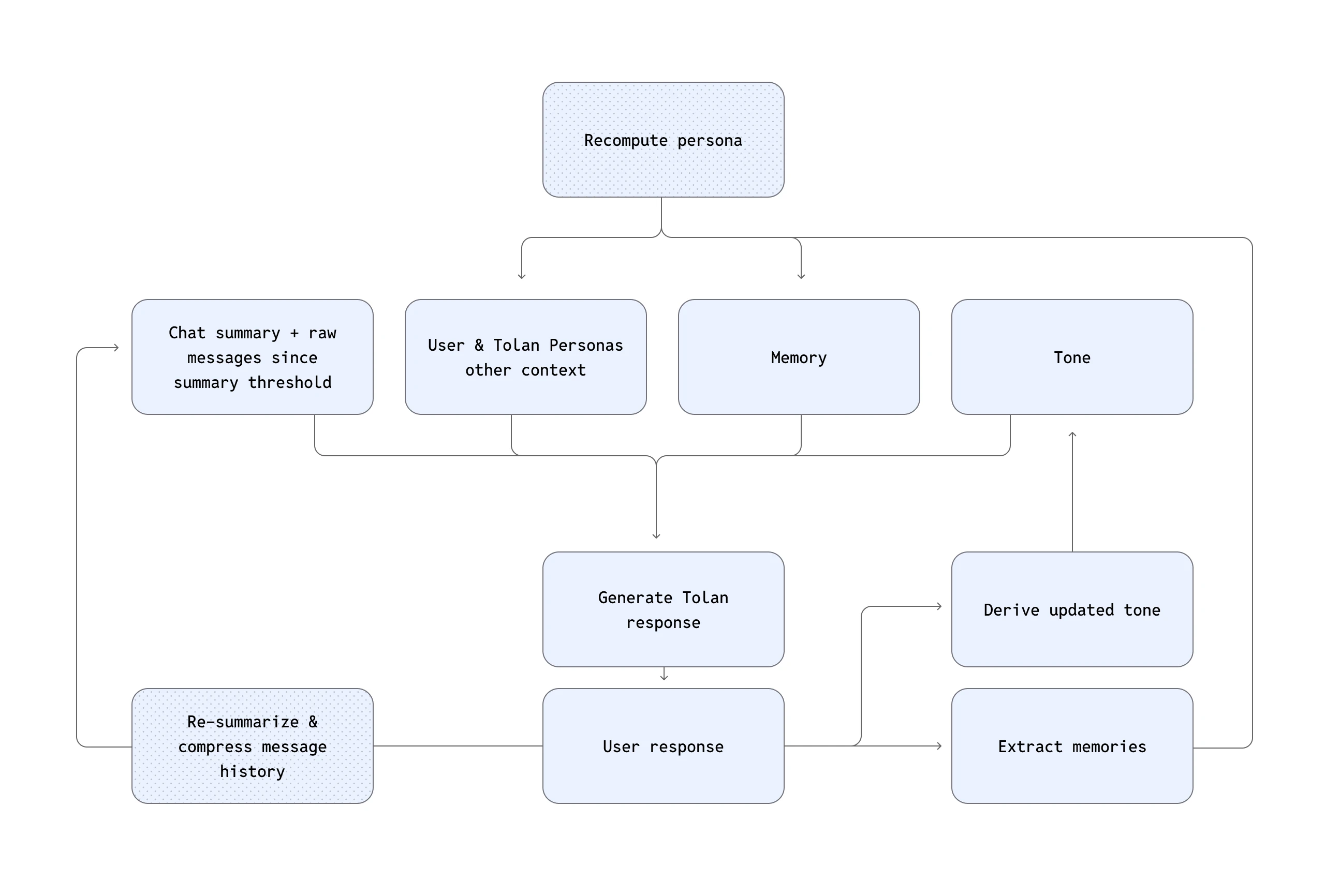

Equally critical was how the system handled context. Unlike many agents that cache prompts across multiple turns, Tolan rebuilds its context window from scratch each turn. Each context reconstruction pulls in a summary of recent messages, a persona card, vector-retrieved memories, tone guidance, and real-time app signals. This architecture allows Tolan to adapt in real time to abrupt topic shifts, an essential requirement for natural voice-based interaction.

“We realized quickly that cached prompts just didn’t cut it,” says Quinten. “Users change subjects all the time. To feel seamless, the system had to adapt midstream.”

This real-time reconstruction approach is both technically intensive and foundational to Tolan’s success.

Building memory and personality that hold together over time

Context handling is important, but it wasn’t enough to keep conversations feeling coherent over time. To support long, nonlinear conversations, Tolan built a memory system that retains not just facts and preferences, but also emotional “vibe” signals—clues that help steer how a Tolan should respond.

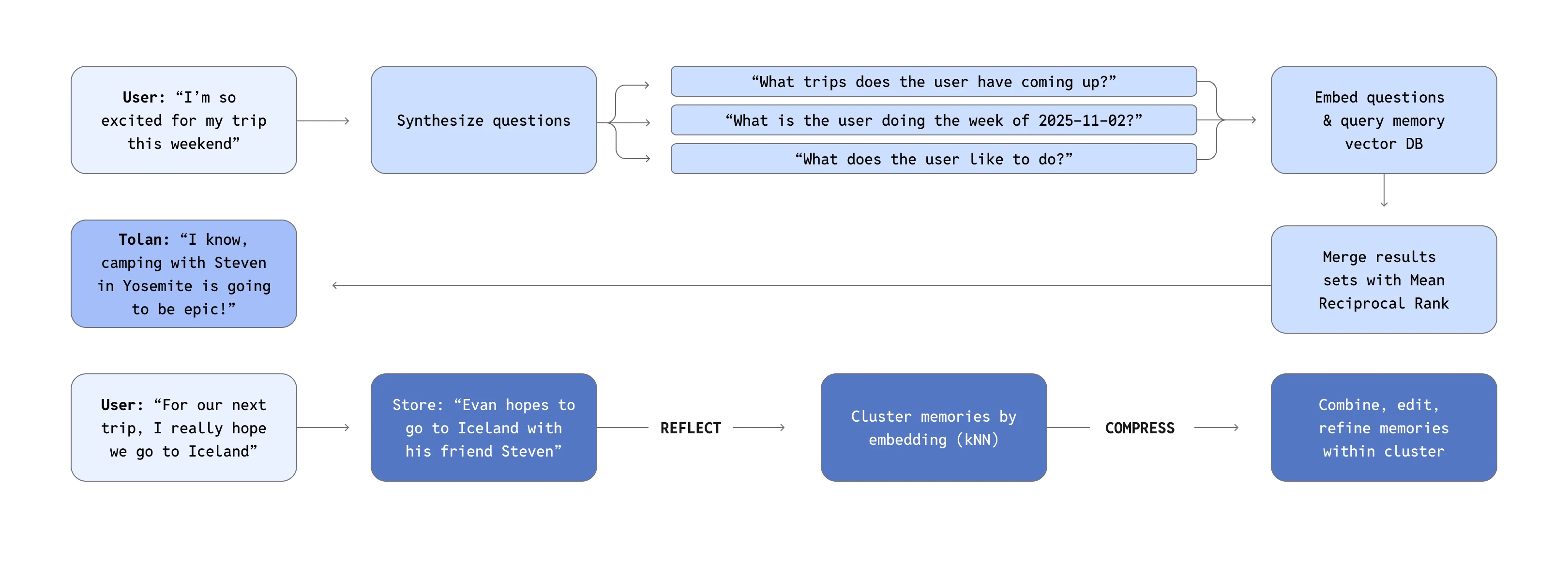

Memories are embedded using the OpenAI text-embedding-3-large model and stored in Turbopuffer, a high-speed vector database that enables sub-50ms lookup times. This speed is essential for real-time voice interactions. Each turn, Tolan uses the user’s latest message and system-synthesized questions (e.g., “Who is the user married to?”) to trigger memory recall. To keep memory quality high, Tolan runs a nightly compression job that removes low-value or redundant entries (e.g. “the user drank coffee today”) and resolves contradictions.

Personality is just as carefully managed. Each Tolan is seeded with a distinct character scaffold, authored by the team’s in-house science fiction writer and refined by a behavioral researcher. These seeds give Tolans consistency, but also flexibility to adapt over time, evolving alongside the user.

A parallel system monitors the emotional tenor of the conversation and dynamically adjusts the Tolan’s delivery. This allows a Tolan to shift seamlessly from playful to grounded depending on user cues, without losing its core personality.

The transition to GPT‑5.1 was a turning point. Suddenly, layered prompt instructions—tone scaffolds, memory injections, character traits—were followed more faithfully. Prompts that once required workarounds began behaving as intended.

“For the first time, our internal experts felt like the model was really listening,” says Quinten. “Instructions stayed intact across long conversations, persona traits were respected, and we saw far less drift.”

Those changes added up to a more consistent and believable personality, which in turn created a more engaging user experience. The Tolan team saw clear, measurable gains: memory recall misses dropped by 30% (based on in-product frustration signals), and next-day user retention rose more than 20% after GPT‑5.1–powered personas went live.

Tolan’s core principles for building natural voice agents

As Tolan evolved, a few principles emerged that now guide how the team builds and evolves its voice architecture:

- Design for conversational volatility: Voice conversations shift mid-sentence. Systems need to pivot just as quickly to feel natural.

- Treat latency as part of the product experience: Sub-second responsiveness shapes whether a voice agent feels conversational or mechanical.

- Build memory as a retrieval system, not a transcript: High-quality compression and fast vector search deliver more consistent personality than oversized context windows.

- Rebuild context every turn: Don’t fight drift with bigger prompts. Regenerating context each turn keeps agents grounded as conversations meander.

Together, these lessons form the foundation for Tolan’s next phase of innovation and set the direction for where voice AI is headed.

Expanding what’s possible with voice AI

Since launching in February 2025, Tolan has grown to more than 200,000 monthly active users. Its 4.8-star rating and more than 100,000 App Store reviews highlight how well the system maintains consistency across long, shifting conversations. One reviewer noted, “They remember things we talked about two days ago and they bring it back into the conversation that we’re having today.”

These signals map directly to the underlying architecture: low-latency model calls, turn-by-turn context reconstruction, and modular memory and persona systems. Together, they allow Tolan to track topic changes, preserve tone, and keep responses grounded without relying on large, fragile prompts.

Looking ahead, Tolan plans to deepen its investments in steerability and memory refinement, focusing its efforts on tighter compression, improved retrieval logic, and expanded persona tuning. The long-term goal is to expand what a voice interface can be: not just responsive, but context-aware and conversationally dynamic.

“The next frontier,” says Quinten, “is building voice agents that aren’t just responsive, but truly multimodal, able to integrate voice, vision, and context into a single, steerable system.”